Azure Data Factory & DevOps – Advanced YAML Pipelines

In this post I would like to return to modern Azure DevOps YAML Pipelines. It extends the previous post, Azure Data Factory & DevOps – YAML Pipelines. Since that post was published, YAML Pipelines have moved from preview to general availability, so it is a good time to talk about what comes next and cover more advanced topics, such as pipeline templates, parameters, stages, and deployment jobs.

Prerequisites

A source-control-enabled instance of ADF. The development environment should already have source control integration configured. This was covered in a previous post: Azure Data Factory & DevOps – Integration with a Source Control

A basic understanding of YAML Pipelines, especially in combination with ADF. The previous post, Azure Data Factory & DevOps – YAML Pipelines, is a good starting point. This post expands on its initial idea and refactors its code.

The next steps

While the previous post showed basic YAML scenarios, it still used a “one-file” implementation, with no code re-use, no approvals, and no split into stages. This post addresses these issues one by one.

Parameterized templates

The primary purpose of Azure DevOps YAML Pipeline templates is code re-use. Think of them as the pipeline counterpart of stored procedures in SQL Server: a parameterized template behaves much like a stored procedure with parameters. Templates have another function too: a template can be made mandatory for pipelines to extend (inherit from), so it acts as a security or governance control over what the inheriting pipeline is allowed to do. In this post I focus only on code re-use. Below is an example of one pipeline re-using the same template twice with different parameters, to deploy ADF to the staging and production environments:

```yaml
stages:
# Deploy to staging
- template: stages\deploy.yaml
  parameters:
    Environment: 'stg'

# Deploy to production
- template: stages\deploy.yaml
  parameters:
    Environment: 'prd'
```

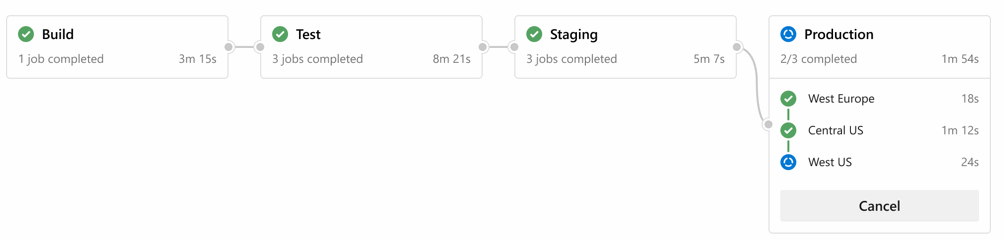
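The caller passes values; the template declares what it accepts. As a hedged sketch (not the author's template, which declares untyped parameters), the parameter block at the top of stages\deploy.yaml could be typed and restricted to the two known environments, so that a typo such as 'prod' fails pipeline validation instead of silently producing an unexpected stage:

```yaml
# Top of stages/deploy.yaml (sketch): typed parameter with an allowed-values list
parameters:
- name: Environment
  type: string
  default: 'stg'
  values:        # any value outside this list fails when the pipeline is compiled
  - 'stg'
  - 'prd'
```

Because templates are expanded before the run starts, this check happens at compile time, before any stage executes.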

Stages

Stages act as logical containers for pipeline jobs. For instance, a build stage runs jobs related to the build process, a deployment stage consists of deployment jobs, and so on. Stages are also needed when the pipeline must pause during execution for external checks, such as approvals, artifact evaluations, or REST API calls.
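A minimal sketch of two stages, assuming placeholder script steps and hypothetical stage and job names. By default, stages run in the order they are declared, each depending on the previous one; dependsOn just makes that explicit. The approvals and checks themselves are configured on an environment in the Azure DevOps UI rather than in YAML; a stage whose deployment job targets that environment waits until those checks pass.

```yaml
stages:
- stage: Build
  jobs:
  - job: Build
    steps:
    - script: echo "build steps go here"    # placeholder step

- stage: Deploy
  dependsOn: Build                          # explicit; same as the default sequential order
  jobs:
  - job: Deploy
    steps:
    - script: echo "deploy steps go here"   # placeholder step
```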

Deployment Jobs

Deployment jobs are a special type of job. They provide a few extra benefits:

- support for various deployment strategies;
- a dedicated deployment history for later auditing;
- mapping to “environments”, which makes it possible to set approvals.
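A bare-bones deployment job, sketched for comparison with a regular job. The environment name below is only an example that follows the naming convention used later in deploy.yaml (env-adf-ProductName-Environment); the step is a placeholder.

```yaml
jobs:
- deployment: DeployADF                      # "deployment" instead of "job"
  environment: 'env-adf-azdo-adv-yaml-stg'   # approvals and checks on this environment apply here
  strategy:
    runOnce:                                 # simplest strategy; rolling and canary also exist
      deploy:
        steps:
        - script: echo "deployment steps go here"   # placeholder step
```

The runs of this job are then recorded in the deployment history of that environment.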

The code transformation

I will start by refactoring the code from the previous blog post. There it was a single-file YAML pipeline, main.yaml, which held all the logic. It will now be split into three smaller files:

- main.yaml acts as the orchestrator.
- build.yaml and deploy.yaml in the folder stages are new templates, each implementing the logic of one stage.

The starting point: main.yaml

main.yaml contains the trigger configuration, internal variables, and calls to the stage-specific templates.

```yaml
# Batching trigger set to run only on adf_publish branch
# build folder is to be excluded
trigger:
  batch: true
  branches:
    include:
    - adf_publish
    exclude:
    - master
  paths:
    exclude:
    - build/*
    include:
    - "*"

variables:
  ProductName: "azdo-adv-yaml"
  Subscription: "Visual Studio Enterprise Subscription(your_subscription_id)"

# The build agent is based on Windows OS.
pool:
  vmImage: "windows-latest"

stages:
# Build ------------------------------------------------------------
- template: stages\build.yaml
  parameters:
    displayName: 'Build a code'

# Deploy ------------------------------------------------------------
# Deploy to staging
- template: stages\deploy.yaml
  parameters:
    Environment: 'stg'
    ProductName: '${{ variables.ProductName }}'
    Subscription: '${{ variables.Subscription }}'

# Deploy to production
- template: stages\deploy.yaml
  parameters:
    Environment: 'prd'
    ProductName: '${{ variables.ProductName }}'
    Subscription: '${{ variables.Subscription }}'
```
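The two deploy calls differ only in the Environment value. As a possible variation (a sketch, not the code in the author's repository), the list of environments could be a pipeline parameter and the template call generated with a compile-time each loop. The parameter name Environments is hypothetical; trigger, variables, and pool stay as above.

```yaml
parameters:
- name: Environments          # hypothetical parameter: deployment order = list order
  type: object
  default:
  - 'stg'
  - 'prd'

stages:
- template: stages/build.yaml
  parameters:
    displayName: 'Build a code'

# Expanded at compile time into one deploy stage per environment
- ${{ each env in parameters.Environments }}:
  - template: stages/deploy.yaml
    parameters:
      Environment: ${{ env }}
      ProductName: '${{ variables.ProductName }}'
      Subscription: '${{ variables.Subscription }}'
```

Since deploy.yaml names its stage DeployRing_${{ parameters.Environment }}, each generated stage still gets a unique name, and adding an environment becomes a one-line change.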

The build stage – build.yaml

This is the first template, and it has a simple task: prepare and publish ARM templates for later deployment. Note that it references ARM files in the git folder resources\arm\blank-adf. That folder and its files are not in the adf_publish branch by default and have to be added manually. The previous post explains why this is needed.

```yaml
parameters:
- name: displayName
  type: string
  default: 'Build'

stages:
- stage: Build
  displayName: 'Build Stage'
  jobs:
  - job: Build
    displayName: ${{ parameters.displayName }}
    steps:
    # Step 1: Check out the code into the local folder src
    - checkout: self
      path: src

    # Step 2a: Find the ARM json files for deploying a blank ADF in src and copy them into the artifact staging folder
    - task: CopyFiles@2
      inputs:
        SourceFolder: '$(Pipeline.Workspace)\src\resources\arm\blank-adf'
        Contents: '**/*.json'
        TargetFolder: '$(build.artifactstagingdirectory)\arm'
        CleanTargetFolder: true
        OverWrite: true
      displayName: 'Extract ARM - Blank ADF Service'
      enabled: true

    # Step 2b: Find the other ADF files (pipelines, datasets and so on) in the checked-out adf_publish branch
    # and copy them into the artifact staging folder
    - task: CopyFiles@2
      inputs:
        SourceFolder: '$(Pipeline.Workspace)\src'
        Contents: '**/*ForFactory.json'
        TargetFolder: '$(build.artifactstagingdirectory)\adf_publish'
        CleanTargetFolder: true
        OverWrite: true
        flattenFolders: true
      displayName: 'Extract ARM - ADF Pipelines'

    # Step 3: Publish the artifacts
    - publish: $(build.artifactstagingdirectory)
      artifact: drop
      displayName: 'Publish artifacts'
```

There are two CopyFiles tasks. The first copies the ARM files needed to create an empty Data Factory instance. The second extracts the ARM files that describe the state of the Data Factory objects.

The deployment stage – deploy.yaml

The second template deploys the extracted ARM files. It is referenced twice in main.yaml, to run the deployment in the staging and production environments.

```yaml
parameters:
- name: Environment
  default: 'stg'
- name: Subscription
  default: 'yourSubscription'
- name: ProductName
  default: 'prd1'

stages:
- stage: DeployRing_${{ parameters.Environment }}
  displayName: 'Deploy to ${{ parameters.Environment }} Ring'
  jobs:
  - deployment: DeployADF
    displayName: Deploy ADF
    environment: 'env-adf-${{ parameters.ProductName }}-${{ parameters.Environment }}'
    variables:
      Subscription: '${{ parameters.Subscription }}'
      Environment: '${{ parameters.Environment }}'
      ProductName: '${{ parameters.ProductName }}'
    strategy:
      runOnce:
        deploy:
          steps:
          # Step 1: Download the artifact published by the build stage
          - download: current
            artifact: drop

          # Step 2: Deploy the Azure Data Factory instance. This is needed on the first deployment of the environment.
          # If the ADF instance already exists, it does nothing.
          - task: AzureResourceManagerTemplateDeployment@3
            inputs:
              deploymentScope: 'Resource Group'
              azureResourceManagerConnection: '$(Subscription)'
              action: 'Create Or Update Resource Group'
              resourceGroupName: 'rg-$(ProductName)-$(Environment)'
              location: 'West Europe'
              templateLocation: 'Linked artifact'
              csmFile: '$(Pipeline.Workspace)\drop\arm\template.json'
              csmParametersFile: '$(Pipeline.Workspace)\drop\arm\parameters.json'
              overrideParameters: '-name "df-$(ProductName)-$(Environment)"'
              deploymentMode: 'Incremental'
            displayName: Deploy ADF Service
            enabled: true

          # Step 3: Deploy ADF objects such as pipelines and data flows, using the ARM templates that ADF generates on each publish
          - task: AzureResourceManagerTemplateDeployment@3
            inputs:
              deploymentScope: 'Resource Group'
              azureResourceManagerConnection: '$(Subscription)'
              action: 'Create Or Update Resource Group'
              resourceGroupName: 'rg-$(ProductName)-$(Environment)'
              location: 'West Europe'
              templateLocation: 'Linked artifact'
              csmFile: '$(Pipeline.Workspace)\drop\adf_publish\ARMTemplateForFactory.json'
              csmParametersFile: '$(Pipeline.Workspace)\drop\adf_publish\ARMTemplateParametersForFactory.json'
              overrideParameters: '-factoryName "df-$(ProductName)-$(Environment)"'
              deploymentMode: 'Incremental'
            displayName: Deploy ADF Pipelines
            enabled: true
```

The deployment job downloads the ARM files, creates the ADF instance if needed, and then performs the final deployment of objects such as datasets, pipelines, linked services, and so on.

The source code

The source code can be found in my GitHub repository: adf-yaml-advanced

Final words

This post covered more advanced usage of Azure DevOps YAML pipelines together with Azure Data Factory. It showed the benefits of splitting YAML code into smaller templates, and how stages and the more powerful deployment jobs can be used.

Many thanks for reading.