Azure Data Factory & DevOps – YAML Pipelines

This post is about configuring Azure DevOps YAML pipelines as the CI/CD process for Azure Data Factory. It gives reasons why YAML can be a preferable alternative to Classic pipelines. It also demonstrates a basic scenario: a YAML pipeline that automatically deploys Azure Data Factory objects to a staging environment.

Prerequisites

- Source Control enabled instance of ADF. The development environment should already have source control integration configured. This aspect was covered in a previous post: Azure Data Factory & DevOps – Integration with a Source Control

Why YAML Pipelines?

We all love the old way our CI/CD processes were built in TFS, TFVS or Azure DevOps. This is because of:

- the versatility of easy-to-use tasks as building blocks

- a clean user interface that focuses on simple mouse operations

However, Microsoft now calls this type of pipeline “Classic” and advises switching to the new alternative – YAML pipelines. A very basic YAML pipeline can be a single file and looks like this:

    pool:
      vmImage: 'ubuntu-16.04'

    jobs:
    # job names may contain only letters, digits, and underscores
    - job: Job_A
      steps:
      - bash: echo "Echo from Job A"
    - job: Job_B
      steps:
      - bash: echo "Echo from Job B"

So, the entire CI/CD process can be declared as a single YAML file. It also can be split into smaller pieces – re-usable templates. This brings some benefits:

- The definition of the pipeline is stored together with the codebase and is versioned in your source control.

- Changes to the pipeline can break a build process the same way as changes to the codebase. Therefore, peer reviews and approvals also apply to the pipeline code.

- Complex pipelines can be split into re-usable blocks – templates. Templates are conceptually similar to SQL Server’s stored procedures: they can be parameterized and reused.

- Because the pipeline is YAML code, it is easy to refactor. Common tasks like searching for a string pattern or a variable usage become trivial. In a Classic pipeline, the same task requires walking through each step in the UI and checking every field.
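
To illustrate the refactoring point, here is a minimal PowerShell sketch that lists every place where a pipeline variable is referenced with the `$(name)` macro syntax. The function name `Find-PipelineVariableUsage`, the variable name `StorageName` and the `.\pipelines` folder are hypothetical and only serve the example.

```powershell
# Sketch: find usages of a pipeline variable in YAML pipeline files.
# Find-PipelineVariableUsage is a hypothetical helper, not part of any module.
function Find-PipelineVariableUsage {
    param(
        [Parameter(Mandatory)][String]$VariableName,
        [String]$Path = "."
    )

    # Build a regex that matches the macro syntax, e.g. $(StorageName)
    $Pattern = '\$\(' + [regex]::Escape($VariableName) + '\)'

    # Scan all YAML files below $Path and report file, line number and line text
    Get-ChildItem -Path $Path -Recurse -File -Include "*.yml", "*.yaml" |
        Select-String -Pattern $Pattern |
        Select-Object Path, LineNumber, Line
}
```

A call such as `Find-PipelineVariableUsage -VariableName "StorageName" -Path .\pipelines` returns one row per matching line, which is the kind of check that needs a click-through of every task in a Classic pipeline.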

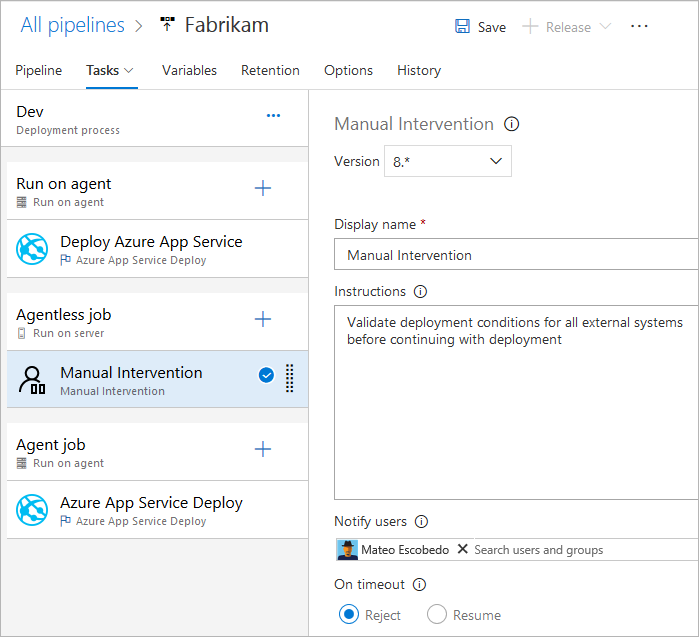

I recently published a step-by-step guide for Classic pipelines: Azure Data Factory & DevOps – Setting-up Continuous Delivery Pipeline. However, it is now time to look into the alternative.

Continue reading…

Azure Data Lake gen2 & SQL Server – Getting started with a PolyBase

Imagine a scenario: your team runs SQL Server boxes, has plenty of experience in T-SQL, and sometimes needs to dump query results into a data lake. Or the data is to be aged out and placed in an archival location. Or yet another scenario: interactive querying of Parquet files by joining them to local relational tables.

Starting with SQL Server 2016, Microsoft delivered PolyBase, a solution for such cases. This post is about getting started with this feature and running a few demos: data ingestion and data retrieval.

Prerequisites

- SQL Server 2016 or later with PolyBase installed. More information: Install PolyBase on Windows

- Azure Data Lake Gen2 account.

So, what is PolyBase?

Let’s start the theoretical part with the official definition:

PolyBase enables your SQL Server instance to process Transact-SQL queries that read data from external data sources. SQL Server 2016 and higher can access external data in Hadoop and Azure Blob Storage.

What is PolyBase?

With the SQL Server 2019 release, the list of external data sources was expanded to include Oracle, Teradata, MongoDB, and generic ODBC types. In this post, I will focus on connectivity between SQL Server and HDFS-based data lakes, like Azure Data Lake gen2.

PolyBase logically acts as a super-set on top of SQL Server Data Engine:

Azure Data Factory & DevOps – Setting-up Continuous Delivery Pipeline

This is the final post of a DevOps series related to Classic pipelines. Here I will touch on the well-known practices of Continuous Integration/Continuous Delivery (CI/CD) and how they relate to Azure Data Factory. It is a continuation of the post I published earlier: Azure Data Factory & DevOps – Integration with a Source Control.

Prerequisites

- Pre-configured development, test and production environments. They can be generated and configured by using scripts from the previous two posts.

- Source Control enabled development environment. The development environment should already have source control integration configured. This aspect was illustrated in a previous post

So, what does the term “CI/CD” mean for ADF development?

Before moving on to the practical part, it is worth walking through the terminology and understanding some limitations of the current implementation of Data Factory v2.

Continuous integration is a coding philosophy and set of practices that drive development teams to implement small changes and check-in code to version control repositories frequently. The technical goal of CI is to establish a consistent and automated way to build, package, and test applications.

What is CI/CD? Continuous integration and continuous delivery explained

Continuous delivery picks up where continuous integration ends. CD automates the delivery of applications to selected infrastructure environments. Most teams work with multiple environments other than the production, such as development and testing environments, and CD ensures there is an automated way to push code changes to them.

Azure Data Factory v2 currently has limited support for the Continuous Integration (CI) concept. Of course, developers can still perform frequent code check-ins. However, there is no way to configure automated builds and packaging of the data factory pipelines. This is because the collaboration branch (normally master) stores JSON definitions of objects such as pipelines, datasets, and linked services, and such objects cannot be deployed directly to the Data Factory service until they are rebuilt into an ARM template.

Continue reading…

Azure Data Factory & DevOps – Integration with a Source Control

A few recently published posts – Automated Deployment via Azure CLI and Post-Deployment Configuration via Azure CLI – are about getting blank environments deployed and configured automatically and consistently. It is now time to integrate the development stage with Azure DevOps. In this post, I will give a step-by-step guide on how to add a Data Factory instance to a source control system. The next one will cover Continuous Integration and Continuous Delivery (CI/CD) in more detail.

Prerequisites

- Blank development, test and production environments. They can be generated and configured by using scripts from the previous two posts. The whole landscape will eventually look similar to this:

Why bother with source control and Azure DevOps?

Data Factory currently supports two editing modes: Live and Git-integrated. While Live editing is an easy start and is available by default, the Git-integrated mode has various advantages:

- Source Control Integration and CI/CD:
  - Ability to track/audit changes.
  - Ability to revert changes that introduced bugs.
  - Release pipelines with automatic triggers as soon as changes are published to a development stage.

- Partial Saves:
  - With Git integration, you can continue saving your changes incrementally, and publish to the factory only when you are ready. Git acts as a staging place for your work.

- Collaboration and Control:
  - A code review process.
  - Only certain people in the team are allowed to “Publish” the changes to the factory and trigger an automated delivery to test / production.

- Showing diffs:
  - Shows all resources/entities that were modified, added, or deleted since the last time you published to your factory.

Azure Data Factory & DevOps – Post Deployment Configuration via Azure CLI

The previous post, Automated Deployment via Azure CLI, is an example of how to get a brand-new environment by firing a script. However, that environment comes blank and unconfigured. In this post, I would like to talk about automated configuration of Azure Data Factory deployments. In most cases, at least a few things can be scripted: creating storage containers, uploading sample data, creating secrets in a Key Vault, and granting a Data Factory access to them.

Prerequisites

- Azure CLI. This is a modern cross-platform command-line tool to manage Azure services. It can serve as a replacement for the older AzureRM library. Read more: Azure PowerShell – Cross-platform “Az” module replacing “AzureRM”.

- MoviesDB.csv. This flat dataset is often referenced in data engineering training. It is downloadable from a GitHub repository.

Coding a PowerShell script

Step 1. A naming convention and resource names

This block defines the resource names as variables that the configuration commands use later.

    param(
        [String]$EnvironmentName = "adf-devops2020",
        [String]$Stage = "dev",
        [String]$Location = "westeurope"
    )

    $OutputFormat = "table" # other options: json | jsonc | yaml | tsv

    # internal: assign resource names
    $ResourceGroupName = "rg-$EnvironmentName-$Stage"
    $ADFName = "adf-$EnvironmentName-$Stage"
    $KeyVaultName = "kv-$EnvironmentName-$Stage"
    $StorageName = "adls$EnvironmentName$Stage".Replace("-", "")
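
As a rough sketch of how these variables might feed the configuration commands listed in the introduction (containers, sample data, secrets, access for the Data Factory), the Azure CLI calls could look as follows. This is not the script from the full post. The function name `Invoke-PostDeploymentConfig`, the container name `movies`, the secret name `StorageAccountKey` and the local file path are assumptions made for illustration, and the Key Vault is assumed to use access policies rather than Azure RBAC.

```powershell
param(
    [String]$EnvironmentName = "adf-devops2020",
    [String]$Stage = "dev"
)

# Same naming convention as in Step 1
$ResourceGroupName = "rg-$EnvironmentName-$Stage"
$ADFName = "adf-$EnvironmentName-$Stage"
$KeyVaultName = "kv-$EnvironmentName-$Stage"
$StorageName = "adls$EnvironmentName$Stage".Replace("-", "")

# Hypothetical wrapper; nothing touches Azure until it is called explicitly
function Invoke-PostDeploymentConfig {
    # Create a container for the sample data ("movies" is a made-up name).
    # --auth-mode login assumes the signed-in user has a data-plane role on the account.
    az storage container create --name "movies" --account-name $StorageName --auth-mode login

    # Upload the sample dataset (assumes MoviesDB.csv sits in the current folder)
    az storage blob upload --account-name $StorageName --container-name "movies" `
        --name "MoviesDB.csv" --file ".\MoviesDB.csv" --auth-mode login

    # Store the first storage account key as a Key Vault secret
    $StorageKey = az storage account keys list --account-name $StorageName `
        --resource-group $ResourceGroupName --query "[0].value" --output tsv
    az keyvault secret set --vault-name $KeyVaultName --name "StorageAccountKey" `
        --value $StorageKey --output none

    # Look up the Data Factory managed identity and let it read secrets
    $PrincipalId = az resource show --resource-group $ResourceGroupName --name $ADFName `
        --resource-type "Microsoft.DataFactory/factories" --query identity.principalId --output tsv
    az keyvault set-policy --name $KeyVaultName --object-id $PrincipalId `
        --secret-permissions get list --output none
}
```

With the default parameters the names resolve to `rg-adf-devops2020-dev`, `adf-adf-devops2020-dev`, `kv-adf-devops2020-dev` and `adlsadfdevops2020dev`. Calling `Invoke-PostDeploymentConfig` after `az login` runs the four steps in order.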

Continue reading…